Understanding Equal-cost Multi-path Routing (ECMP)

Welcome back to Continuous Improvement, where we dive deep into the tech that keeps our world running smoothly. I'm your host, Victor Leung, and today we're unpacking a widely used strategy in the world of network management: Equal-cost Multi-path (ECMP) Routing. This approach shapes how data travels across modern networks, improving both efficiency and reliability. So, if you've ever wondered about the best ways to manage network traffic, today's episode is for you.

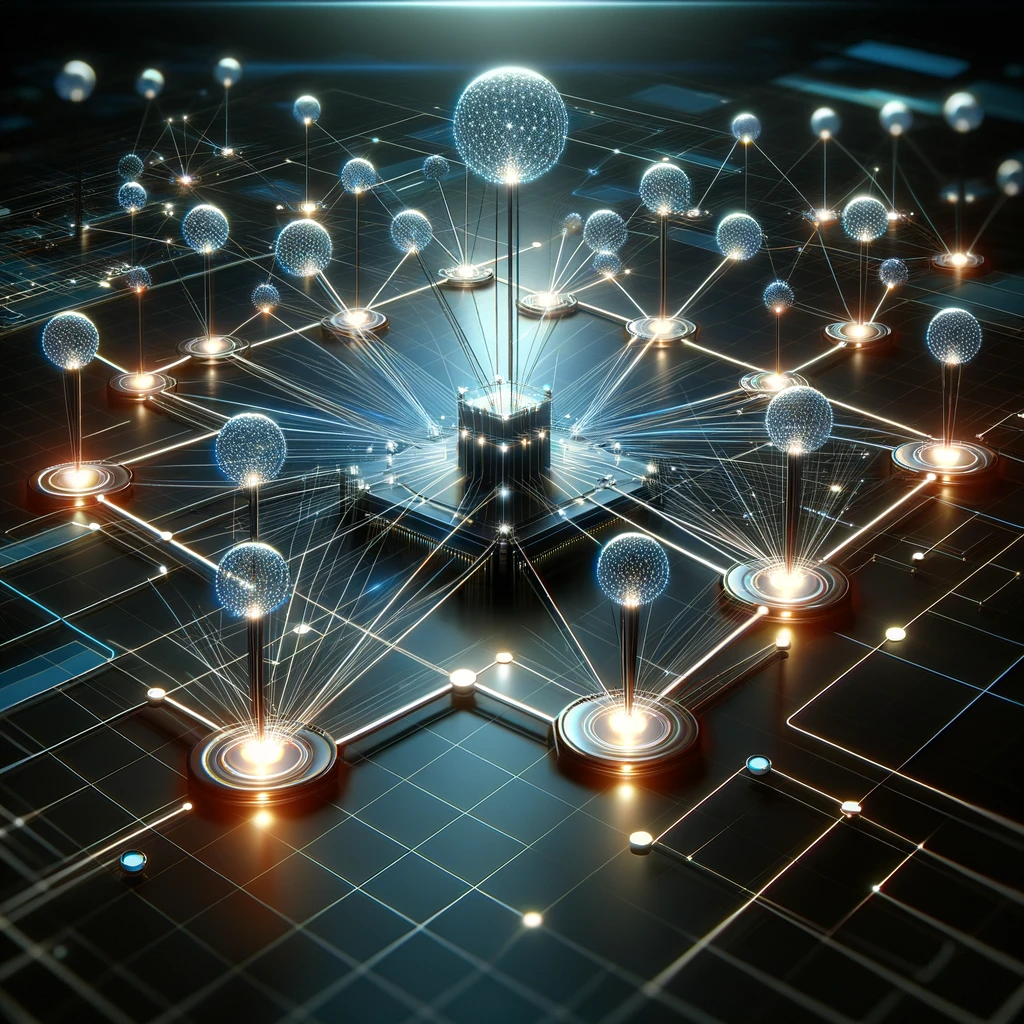

Let’s start with the basics. ECMP stands for Equal-cost Multi-path Routing. Unlike single-path routing, which sends all traffic for a destination along one best path, ECMP allows that traffic to be distributed across multiple paths that have the same cost. The cost comes from the routing protocol's metric, which could reflect things like hop count, bandwidth, or delay.

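So, how does ECMP work? Imagine you have several roads from your home to your office, and all of them take the same time. Instead of every commuter piling onto one road, drivers are spread across all of them. Similarly, when a router has several equal-cost routes to a destination, ECMP keeps all of them in the forwarding table and spreads traffic across them, balancing the network load and reducing bottlenecks. One caveat to the analogy: standard ECMP does not check live congestion; it picks a path using a fixed calculation on each packet's headers.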

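The beauty of ECMP lies in its simplicity and effectiveness. It typically uses a hashing algorithm over packet header fields like source and destination IP addresses and port numbers. Packets from the same flow produce the same hash, so they follow the same path, while different flows are spread roughly evenly across the available paths. The spread is statistical rather than guaranteed, but with many flows it helps prevent any single path from being overwhelmed and enhances overall network throughput.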
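
To make the hashing idea concrete, here's a minimal Python sketch of per-flow path selection. The function name `pick_path`, the next-hop labels, and the choice of SHA-256 are illustrative assumptions; real routers use their own hardware hash functions, and the header fields they hash on are usually configurable.

```python
import hashlib
from collections import Counter


def pick_path(src_ip, dst_ip, src_port, dst_port, protocol, paths):
    """Pick one equal-cost path for a flow by hashing its 5-tuple.

    Illustrative only: SHA-256 stands in for a router's hardware hash.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    # Reduce the first 4 bytes of the digest to an index into the path list.
    index = int.from_bytes(digest[:4], "big") % len(paths)
    return paths[index]


# Hypothetical next hops that all have the same routing cost.
paths = ["next-hop-A", "next-hop-B", "next-hop-C", "next-hop-D"]

# 1000 made-up flows that differ only in source port.
flows = [("10.0.0.1", "192.0.2.10", 40000 + i, 443, "tcp") for i in range(1000)]

# Count how many flows land on each path.
counts = Counter(pick_path(*flow, paths) for flow in flows)
print(counts)
```

Running it shows how the flows are divided among the four next hops. The split is close to even but not exact, and calling `pick_path` twice with the same flow always returns the same next hop, which is what keeps a flow's packets on one path.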

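Now, let’s talk benefits. First off, ECMP improves bandwidth utilization by using the combined bandwidth of all available paths instead of leaving backup links idle. More paths mean more aggregate bandwidth, which translates to better performance and faster data delivery. Keep in mind that a single flow still travels over one path, so the gain shows up across many flows rather than within one.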

Next, there’s increased redundancy. Since ECMP doesn’t rely on a single path, the failure of one route doesn’t bring down your network. The failed path is removed from the set, and its traffic is redistributed across the remaining paths, usually with only a brief disruption, which helps maintain network uptime and service availability.
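
Here's a small Python sketch of what that failover could look like with simple hash-and-modulo selection. The helper `select_next_hop`, the next-hop names, and the use of CRC32 are assumptions for illustration, not any vendor's actual implementation.

```python
import zlib


def select_next_hop(flow, next_hops):
    """Hash the flow tuple and map it onto the current list of next hops."""
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]


# Hypothetical equal-cost next hops and made-up flows.
next_hops = ["A", "B", "C", "D"]
flows = [("10.0.0.1", "192.0.2.10", 40000 + i, 443, "tcp") for i in range(1000)]

# Path assignment while all four next hops are healthy.
before = {flow: select_next_hop(flow, next_hops) for flow in flows}

# Next hop "B" fails and is removed from the equal-cost set.
surviving = [hop for hop in next_hops if hop != "B"]
after = {flow: select_next_hop(flow, surviving) for flow in flows}

# Every flow now uses a surviving next hop; count how many changed path.
moved = sum(1 for flow in flows if before[flow] != after[flow])
print(f"{moved} of {len(flows)} flows changed path")
```

With plain modulo hashing, flows that were never on the failed path can also move, because the divisor changes. Many platforms offer resilient or consistent hashing options to limit that churn.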

And we can’t forget about scalability. As networks grow and more paths become available, ECMP can easily integrate these new routes without needing a major overhaul. This makes it an ideal strategy for expanding networks in places like data centers and cloud environments.

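But, no system is without its challenges. One issue with ECMP is the potential for out-of-order packet delivery, as different paths might have slightly different latencies. Per-flow hashing avoids most of this by keeping each flow on one path, but reordering can still appear when traffic is balanced per packet or when flows are moved to a different path after a topology change. This is something network engineers need to monitor, especially for applications that are sensitive to the order in which packets arrive.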
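
To see why differing path latencies can reorder packets, here's a toy Python simulation. It assumes two paths with made-up latencies of 5 ms and 9 ms and one packet sent per millisecond; the function `arrival_order` is a hypothetical helper, and the model ignores queuing and jitter.

```python
def arrival_order(packet_count, path_latencies_ms, per_packet):
    """Return packet sequence numbers in the order they arrive.

    per_packet=True sprays packets round-robin across all paths;
    per_packet=False pins the whole flow to the first path.
    """
    send_interval_ms = 1
    arrivals = []
    for seq in range(packet_count):
        path = seq % len(path_latencies_ms) if per_packet else 0
        arrival_time = seq * send_interval_ms + path_latencies_ms[path]
        arrivals.append((arrival_time, seq))
    arrivals.sort()  # earliest arrival first
    return [seq for _, seq in arrivals]


latencies = [5, 9]  # assumed one-way latency of each path, in milliseconds

sprayed = arrival_order(6, latencies, per_packet=True)
pinned = arrival_order(6, latencies, per_packet=False)

print("per-packet:", sprayed)  # [0, 2, 4, 1, 3, 5]
print("per-flow:  ", pinned)   # [0, 1, 2, 3, 4, 5]
```

In this model, packets sprayed across both paths arrive out of sequence, while the flow pinned to a single path arrives in order.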

In closing, Equal-cost Multi-path Routing is a powerful tool in modern network management, enabling not just more efficient traffic distribution but also adding robustness and flexibility to network infrastructure. Whether you’re in a data center, managing an enterprise network, or even streaming the latest games or movies, ECMP can significantly enhance your network’s performance.

Thanks for tuning in to Continuous Improvement. Today we navigated the complex but crucial world of ECMP, uncovering how it keeps our data flowing reliably and efficiently. Join me next time as we continue to explore the technologies that improve our lives and work. I'm Victor Leung, urging you to keep learning, keep growing, and keep connecting.